On New Year’s Eve 2019 at 10:00 AM EST, an artificial intelligence algorithm sent a warning to health professionals around the globe about a serious respiratory disease affecting Wuhan, China. Besides identifying the source of the outbreak, it used airline ticketing data to predict that the virus would likely spread to Seoul, Bangkok, Taipei, and Tokyo.

By scouring huge amounts of data, including public bulletins, airline travel data, government documents, news reports, and other sources, the AI, built by Canadian tech company BlueDot, managed to spot the outbreak of the Wuhan coronavirus before any other organization and issue a warning to medical bureaus across the world.

By comparison, even the World Health Organization didn’t issue its warning to the general public until nine days later.

As of writing, more than 20,000 people have been infected with the virus, but authorities around the world are working hard to contain its spread, thanks in no small part to this early warning.

AI and BI: The End of Human Decision Making in Business?

It’s easy to see why there’s excitement about AI and related fields such as machine learning and automation. The identification of the Wuhan coronavirus, and the warning that followed, is a remarkable example of AI’s ability to make decisions and act on them faster than people can.

In the business intelligence world, AI is often heralded as the future, even a BI killer. Why involve people in the process if AI algorithms can make better decisions?

Augmented analytics, which uses AI and ML to help surface relevant insights, is right around the corner, and some even claim that human-out-of-the-loop AI systems are just a couple of years away.

But is AI likely to kill the need for BI? Can we remove humans from business decisions, and more importantly, should we? Can AI make decisions that are better than the ones people make?

The AI Black Box

Source: actionable.co

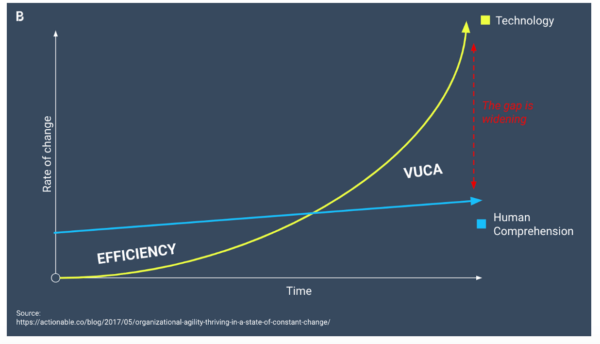

AI, along with other technical fields, is advancing rapidly, faster than people can keep up with. The technology behind modern AI, such as neural networks, has become so complex that even AI specialists do not fully understand it.

Elon Musk once described the development of artificial intelligence as “summoning the demon,” interesting words from the man who wanted to build a fully automated “alien dreadnought factory.”

“I am really quite close, I am very close, to the cutting edge in AI, and it scares the hell out of me. It’s capable of vastly more than almost anyone knows, and the rate of improvement is exponential.” – Elon Musk, CEO, Tesla

Even when humans seem to be in control, things don’t always go as expected.

Recently, Facebook developed AI-powered chatbots to negotiate prices with one another. After some simple preliminary dialog, a funny thing happened: the bots began to develop their own language that humans couldn’t understand.

When we don’t understand what AI is doing or how it makes its decisions, we don’t know who to blame when things go wrong. A billionaire investor from Hong Kong is suing a salesman for persuading him to trust another company’s AI, which lost him $20 million in bad trades. But does the developer of the AI bear some responsibility too? Is the salesman the only one at fault, or is the company that built the AI also to blame? Investing is risky, so is anybody really at fault?

Black-boxed AI systems not only make it difficult to draw lines of responsibility; they’re almost impossible to troubleshoot when things go wrong. If an AI system makes or influences a decision that costs a company millions of dollars, future failures can’t be prevented when the steps that led up to that decision are unknown. This is proving to be a massive liability for businesses applying AI. In the UK, legislation is being put in place to penalize companies with multimillion-pound fines if they can’t satisfactorily explain how their AI systems make decisions.

Source: abcnews.go.com

Bias In, Bias Out

There are a number of pervasive myths about artificial intelligence: that decisions made by computers are completely objective, that data is always accurate, and that AI is immune to the biases and cognitive fallibility so inherent to people.

But the truth is that AI algorithms are just as biased. AI is a multiplier and can often magnify biases that already exist in society to extreme levels:

Recently, AI technology at Google was shown to reinforce racial bias. Searching for “hands” yielded pictures of mostly Caucasian hands, while searching for “black hands” returned mostly derogatory depictions.

Last year, several Twitter posts went viral accusing Apple’s new credit card approval process of discriminating against women. The card is now under investigation for sex discrimination.

Amazon stopped using a hiring algorithm after discovering it favored applicants who used words such as “executed” or “captured,” terms more commonly found on men’s resumes.

A criminal justice algorithm used in Florida mislabeled African-American defendants as “high risk” at almost twice the rate it mislabeled white defendants.
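
To make the “bias in, bias out” mechanism concrete, here is a minimal, hypothetical sketch of a resume screener that learns from past hiring decisions. It is not Amazon’s system: the functions `learn_word_weights` and `score_resume` and the tiny history below are invented for illustration. Each word is weighted by the hire rate of past resumes that contained it, so whatever skew existed in past decisions becomes the model’s notion of merit.

```python
from collections import Counter

# Hypothetical toy screener: nothing here is Amazon's actual system,
# and the hiring history below is made up for illustration.

def learn_word_weights(history):
    """history: list of (words, was_hired) pairs from past hiring decisions.
    Each word's weight is the hire rate among past resumes containing it."""
    seen = Counter()   # how many past resumes contained each word
    hired = Counter()  # how many of those resumes led to a hire
    for words, was_hired in history:
        for word in set(words):
            seen[word] += 1
            if was_hired:
                hired[word] += 1
    return {word: hired[word] / seen[word] for word in seen}


def score_resume(weights, words, default=0.5):
    """Average the learned weights of a resume's words; unseen words get `default`."""
    values = [weights.get(word, default) for word in set(words)]
    return sum(values) / len(values) if values else default


# Made-up history in which past hires happened to favor certain verbs.
history = [
    (["executed", "python"], True),
    (["captured", "sql"], True),
    (["executed", "sql"], True),
    (["collaborated", "python"], False),
    (["collaborated", "sql"], False),
]

weights = learn_word_weights(history)

# Two candidates with the same skill, differing only in word choice.
a = score_resume(weights, ["executed", "python"])
b = score_resume(weights, ["collaborated", "python"])
print(f"executed: {a:.2f}, collaborated: {b:.2f}")  # executed: 0.75, collaborated: 0.25
```

Word choice says nothing about ability here, yet the model rewards it because the historical labels did. Deployed across thousands of applications, that single learned preference is applied every time, which is what “bias at scale” means.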

What makes bias worse in AI systems than in people is that it operates at scale: when these systems discriminate, the effect reaches far more than one location. Things can get out of hand very quickly, as Microsoft found out a few years ago.

In 2016, Microsoft created Tay (“Thinking About You”), an AI-powered chatbot designed to hold real conversations with people on Twitter. Sounds like an interesting idea, right? Just 16 hours after launch, Tay started spewing racial epithets and profanity and was taken offline.

In this case, it was a group of pranksters and a few nefarious characters who manipulated the bot and intentionally caused it to go off the rails. But AI used for critical business decisions could fall victim to hackers, competitors engaging in sabotage, or individuals bent on causing mayhem.

A Double-edged Sword

When firms deploy AI systems at scale, they become targets with multiple attack vectors. Perhaps most worrying is that a new generation of hackers is going beyond simply breaking into databases and learning how to manipulate the AI algorithms themselves. Some are even creating their own AI to assist in their attacks and outsmart security measures.

Even the most sophisticated AI can be duped in ways humans can’t. Manipulating a few pixels can cause AI to see a baseball where none exists, a mistake even a toddler wouldn’t make. An artist recently created virtual ‘traffic jams’ on Google Maps in Berlin by pulling a wagon full of second-hand smartphones!

Source: evolving.org
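
The pixel trick can be illustrated with a deliberately tiny, hypothetical model. The sketch below assumes a linear classifier over five “pixels” with made-up weights; real image classifiers are deep neural networks, but gradient-based attacks on them rely on the same idea: nudge the inputs the model is most sensitive to, each by a small amount, in whichever direction raises the score for the wrong answer. The names `score`, `classify`, and `perturb` are illustrative, not from any library.

```python
# Hypothetical toy image classifier: a linear model over five "pixels" in [0, 1].
# Weights, bias, and pixel values are made up for illustration.

def score(weights, bias, pixels):
    """Linear score: positive means the model 'sees' a baseball."""
    return sum(w * p for w, p in zip(weights, pixels)) + bias


def classify(weights, bias, pixels):
    return "baseball" if score(weights, bias, pixels) > 0 else "not baseball"


def perturb(weights, pixels, epsilon, k):
    """Change only the k pixels the model is most sensitive to, each by at most
    epsilon, in the direction (sign of the weight) that increases the score."""
    most_sensitive = sorted(range(len(weights)), key=lambda i: abs(weights[i]), reverse=True)[:k]
    adversarial = list(pixels)
    for i in most_sensitive:
        step = epsilon if weights[i] > 0 else -epsilon
        adversarial[i] = min(1.0, max(0.0, adversarial[i] + step))  # keep a valid pixel value
    return adversarial


weights = [0.1, -0.2, 3.0, 0.05, -2.5]  # made-up model parameters
bias = -0.5
image = [0.5, 0.5, 0.2, 0.5, 0.4]       # made-up input with no baseball in it

tampered = perturb(weights, image, epsilon=0.2, k=2)
print(classify(weights, bias, image))     # not baseball
print(classify(weights, bias, tampered))  # baseball
```

Only two of the five values change, each by 0.2, yet the label flips. A person looking at the two inputs would barely notice a difference; the model only “notices” the features it has learned to weight heavily.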

Those who are familiar with various AI systems could even engage in data poisoning, manipulating the source datasets to deceive systems and trigger unnecessary or unwarranted responses. It’s a threat so dangerous that even the Pentagon is trying to develop countermeasures that mimic animal immune systems to fight it.

World-renowned physicist Stephen Hawking warned that AI could be our worst mistake: “Success in creating effective AI could be the biggest event in the history of our civilization. Or the worst. So we cannot know if we will be helped by AI, or ignored by it and sidelined, or conceivably destroyed by it.”

Weaponized data or a compromised AI platform, even if used only in the context of BI, could deal a potentially devastating blow to an organization.

Source: popularmechanics.com

Those Pesky Black Swans

AI, at least for the foreseeable future, relies on the past. Its repertoire of data to draw from, including requirements and parameters, is based on information that was recorded and archived. The problem is that the future is full of unknowns.

Writer Nassim Nicholas Taleb popularized the concept of Black Swans: rare, unexpected events that have the power to change society for better or worse. Black Swan events are teachers that directly shape and develop human understanding. They occur regularly, yet unpredictably, recalibrating our frames of reference for the world around us.

The Fukushima disaster is a good example. The Japanese nuclear plant was built to withstand earthquakes of no more than 7.9 on the Richter scale, since larger quakes weren’t expected and hadn’t previously occurred in that part of Japan. But Black Swans are inevitable. In March 2011, the largest earthquake in Japanese history (magnitude 9.0), together with an equally catastrophic tsunami, struck the plant and unleashed the worst nuclear disaster since Chernobyl.

By definition, the unexpected is hard to deal with and impossible to predict. Humans, however, have proven themselves to be the most adaptable species ever. Everything on the planet seems bent on killing us, yet we continue to flourish. Is it even possible to instill that adaptability in our AI creations? Likely not.

Black Swans present a problem for artificial intelligence. AI systems are notoriously poor at handling sudden, unanticipated changes in variables or in their environment. An AI’s response to unexpected events could be wrong, and dangerous.

We saw an example of this with the Viking Sky cruise ship incident last year. Rough seas caused oil to slosh around in the tanks, leading onboard sensors to indicate there was no oil. The automated system then triggered a complete shutdown of the engines. An air rescue effort had to be launched to bring 479 passengers and crew members to safety.

Positive Black Swans, such as emerging trends or discoveries, present another challenge for AI. It’s unlikely that AI would be able to capitalize on those opportunities as well as humans can.

Source: vanityfair.com

Could an AI system have developed a character as popular as Baby Yoda?

Would an AI employed by Puma have realized that paying the world’s best soccer player to tie his shoes in the middle of the World Cup was a great way to get significant publicity?

Would it have made sense for any AI system to bring back Steve Jobs to take the helm of Apple years after he had been forced out?

It turns out that people are very good at handling the unexpected and at making decisions that don’t align with evidence, logic, or common sense. The insights that only humans can bring to business intelligence hold tangible value for businesses that can’t be overstated.

The Power and Brilliance of Human Curiosity

Every firm has access to one of the world’s most powerful tools, yet few extract its full value: human capital, the skills, knowledge, and experience of their workers.

Cultivating creativity and curiosity within their workforces results in measurable gains for businesses. Organizations scoring highest on McKinsey’s Creativity Score outperformed their peers in virtually every category:

67 percent had above-average organic revenue growth

70 percent had above-average total return to shareholders (TRS)

74 percent had above-average net enterprise value (NEV)/forward EBITDA

The data is clear: creativity drives innovation and growth. And research shows that this isn’t limited to a few individuals. In fact, outcomes improve when more people are brought into the mix and diversity is increased further.

Diverse, inclusive teams make better business decisions 87% of the time. They focus more on facts, examine them more carefully, and are much more innovative.

Source: americanscientist.org

Creativity is something AI has yet to learn. It can mimic human creativity quite well, but it can’t think metaphorically or integrate outside context. We’ve trained AI to create paintings that look like Picassos and write music that sounds like Schoenberg’s, but the results are novel, not creative. Creative work differs from novel work in one key way: meaning. AI is good at analyzing what is, discovering patterns, and replicating those patterns, but it still can’t create anything genuinely new and meaningful.

Steve Jobs defined creativity as “…just connecting things. When you ask creative people how they did something, they feel a little guilty because they didn’t really do it, they just saw something. It seemed obvious to them after a while.”

Our previous experiences, even (and maybe especially) those outside our own functions or industries, give us more creative strands we can try tying together. And bringing more humans into the conversation, each with their own wide range of experiences, increases a company’s creative power.

Many advancements in BI are abstracting or limiting humans from the decision-making process. Like our muscles, our decision-making skills atrophy if we don’t exercise them. It’s already affecting some professions: an overreliance on automated systems has produced a generation of pilots who can’t fly very well. Replacing BI decision making with AI algorithms will have a similar effect.

As a species, we’ve had eons to exercise and refine our decision-making abilities. AI and ML systems that are trained for weeks or months don’t possess the vast amounts of context and experience people have. And people bring certain things to the decision-making table that AI never will, qualities such as empathy, morality, risk-taking, altruism, and integrity.

It’s true that in the past few years, there have been a number of attempts to imbue AI systems with codes of ethics. But whose ethics? Values and ethics differ from culture to culture. Ethics can also change over time. Who’s responsible for updating those AI systems to change along with them?

Google defines ‘intelligence’ as the ability to acquire and apply knowledge and skills. Knowledge comes in several types. Explicit knowledge, such as facts and data, is easily transferable, but our insights stem from the tacit knowledge we gather over time. These are things we might not even realize we know, and they are much harder to codify, articulate, and convert into an algorithm.

Harder still is the quality of wisdom. It’s a fuzzy word with all kinds of connotations. While there are many different opinions on the exact definition of the term, almost everybody agrees it’s a quality gained through life experience (i.e., living). AI systems, however complex, are just working with information. Their ‘experience’ could hardly be described as ‘living.’ Whether an AI system can truly be wise is up for debate.

Source: digitalengineering247.com

Finally, one of the great advantages of AI decision making, its lack of emotion, is also its Achilles heel. The best decisions aren’t always logical ones. People who have sustained damage to the parts of their brains responsible for emotion make poorer decisions across multiple areas of their lives. Science is only just beginning to understand the role emotions play in decision making, including business decisions.

Bring More People Into the Data Conversation

AI is going to have a place in business intelligence and in our lives generally. Advancements such as augmented analytics will continue to improve at surfacing relevant insights to people so that they can make the decisions. As AI handles the mechanical or tedious parts of our jobs, it will free us, and even compel us, to redefine our roles and concentrate on more meaningful work. These are positive advancements, and we’re keen to see them develop further. But right now there’s a massive opportunity for businesses to harness the irrational, imperfect, and beautiful power of human curiosity and gain its full value. The key is lowering the technical barrier and allowing domain experts to take part in the data conversation.

Only by sharing a common language can firms extract the most impactful data insights. This is exactly the mission Sigma has chosen to tackle.

Would you like to learn how you can empower all of your people?

(This post originally appeared on sigmacomputing.com)

Article source and credit: feedproxy.google.com http://feedproxy.google.com/~r/B2CMarketingInsider/~3/rFfEUAytHL8/business-intelligence-doesnt-need-more-ai-it-needs-more-humans-02282393