Automakers like Audi, BMW, GM’s Cadillac, Nissan and Tesla have advanced driver assistance systems that are robust enough to take the sting out of that daily highway commute by handling some driving tasks. The problem: Drivers don’t understand the limitations of these systems, according to two new studies released Thursday by the Insurance Institute for Highway Safety.

Confusion around advanced driver assistance systems is prevalent, particularly when it comes to what Tesla’s Autopilot system can and can’t do, according to one IIHS study.

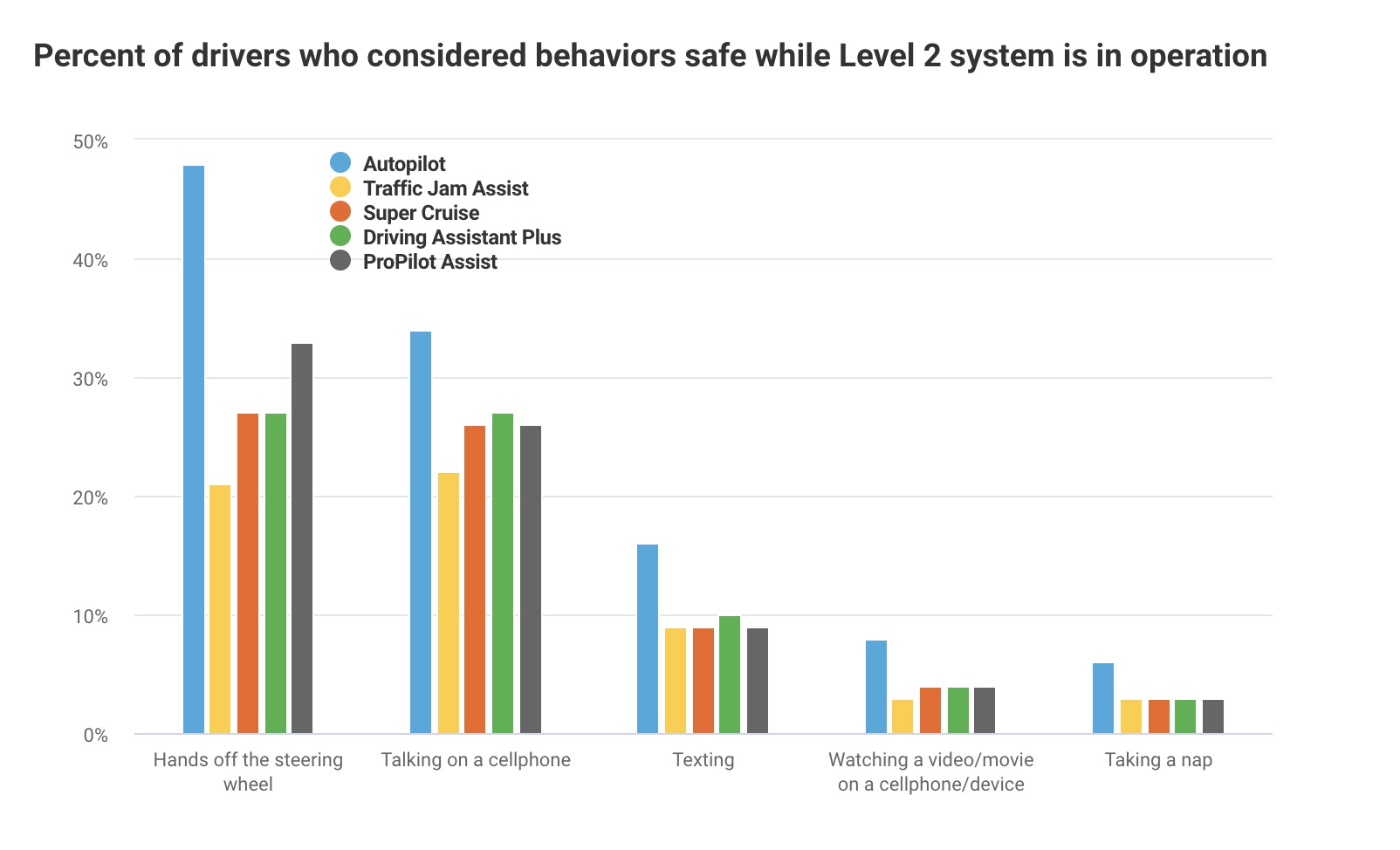

The perception survey asked 2,005 people about five “Level 2” driving automation systems that are available in vehicles today: Tesla’s Autopilot, Traffic Jam Assist in Audi and Acura vehicles, Cadillac’s Super Cruise, BMW’s Driving Assistant Plus and Nissan’s ProPilot Assist. Level 2 means the system can perform two or more parts of the driving task under the supervision of the driver. For instance, the system can keep the car centered in its lane while adaptive cruise control is engaged at the same time.

Some 48% of people surveyed said it would be safe to take their hands off the wheel while using Autopilot, compared with 33% or less for the other systems included in IIHS’s survey. Autopilot also had substantially greater proportions of people who thought it would be safe to look at scenery, read a book, talk on a cellphone or text, IIHS said. And 6% thought it would be OK to take a nap while using Autopilot, compared with 3% for the other systems.

This survey was general by design. It wasn’t a survey of Tesla owners, people who would presumably have a better understanding of how Autopilot works. In fact, that is the argument Tesla made in response to the study.

“This survey is not representative of the perceptions of Tesla owners or people who have experience using Autopilot, and it would be inaccurate to suggest as much,” Tesla said. “If IIHS is opposed to the name ‘Autopilot,’ presumably they are equally opposed to the name ‘Automobile.’”

The company added that it provides owners with clear guidance on how to properly use Autopilot, as well as in-car instructions before they use the system and while the feature is in use. If a Tesla vehicle detects that a driver is not engaged while Autopilot is in use, the driver is locked out of the feature for the rest of that drive.

Even if one were to ignore the cornucopia of YouTube videos showing owners abusing or misusing Tesla Autopilot, the “training” argument doesn’t quite stand up to the results of IIHS’s other study. The second study focused on the effects of training and whether drivers understand the Level 2 automation information shown on the display of a 2017 Mercedes-Benz E-Class equipped with the Drive Pilot system.

IIHS used the E-Class display for its study because, according to the institute, it is typical of displays from other automakers. It would have been interesting to run the same study using the Tesla display, which is significantly different.

Eighty volunteers viewed videos recorded from the driver’s perspective behind the wheel of the E-Class. Half received some training that included a brief orientation about the instrument cluster icons pertaining to the system’s two components, adaptive cruise control and lane centering.

The training didn’t help. Most participants struggled to understand what was happening when the system didn’t detect a vehicle ahead because it was initially beyond the range of detection, IIHS said.

The upshot? Driver assistance systems are becoming more common and capable, and drivers are not keeping up with the changes. That’s a dangerous combination.

More bluntly, people are clueless when it comes to how advanced driver assistance systems (ADAS) work and what they can and can’t do. ADAS is not autonomy. And there are no self-driving production vehicles on the road today, despite what some media outlets and companies claim.
